MaskSketch: Unpaired Structure-guided Masked Image Generation

Dina Bashkirova Jose Lezama Kihyuk Sohn Kate Saenko Irfan Essa

Boston University Google Research Georgia Tech


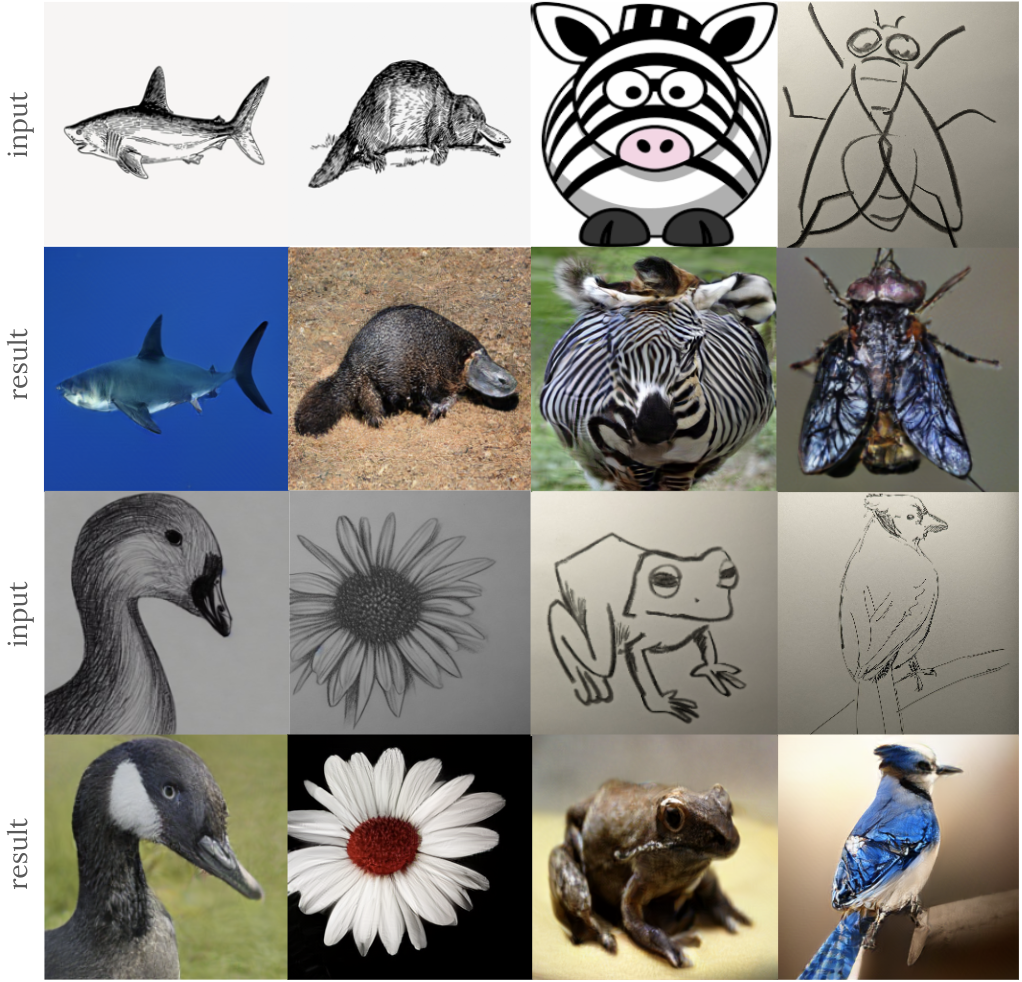

Given an input sketch and its class label, MaskSketch samples realistic images that follow the given structure. MaskSketch works on sketches of various degrees of abstraction by leveraging a pre-trained masked image generator, and requires neither model fine-tuning nor paired supervision.

Abstract

Recent conditional image generation methods produce images of remarkable diversity, fidelity and realism. However, the majority of these methods allow conditioning only on labels or text prompts, which limits their level of control over the generation result. In this paper, we introduce MaskSketch, an image generation method that allows spatial conditioning of the generation result using a guiding sketch as an extra conditioning signal during sampling. MaskSketch utilizes a pre-trained masked generative transformer, requiring no model training or paired supervision, and works with input sketches of different levels of abstraction. We show that intermediate self-attention maps of a masked generative transformer encode important structural information of the input image, such as scene layout and object shape, and we propose a novel sampling method based on this observation to enable structure-guided generation. Our results show that MaskSketch achieves high image realism and fidelity to the guiding structure. Evaluated on standard benchmark datasets, MaskSketch outperforms state-of-the-art methods for sketch-to-image translation, as well as unpaired image-to-image translation approaches.
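The abstract's central observation, that intermediate self-attention maps encode scene layout and object shape, suggests a simple way to score how closely a candidate follows a sketch. The block below is a minimal, hypothetical sketch in pure Python, not the authors' implementation. It assumes attention maps are computed as softmax(QK^T / sqrt(d)) with identity query/key projections on toy token embeddings, and it scores structure with a mean L1 distance between corresponding attention rows. The names `self_attention_map`, `structure_distance`, and `pick_most_structural` are illustrative; MaskSketch's actual structure distance and sampling procedure are defined in the paper and may differ.

```python
import math
import random


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention_map(q, k):
    """Row-stochastic attention matrix softmax(Q K^T / sqrt(d)).

    Assumption: q and k are lists of token vectors; real transformer layers
    would first apply learned query/key projections.
    """
    d = len(q[0])
    scale = 1.0 / math.sqrt(d)
    return [
        softmax([scale * sum(a * b for a, b in zip(qi, kj)) for kj in k])
        for qi in q
    ]


def structure_distance(attn_a, attn_b):
    """Hypothetical structure distance: mean L1 gap between attention rows.

    Each row is a probability distribution, so every per-row term lies in [0, 2].
    """
    n = len(attn_a)
    return sum(
        sum(abs(x - y) for x, y in zip(row_a, row_b))
        for row_a, row_b in zip(attn_a, attn_b)
    ) / n


def pick_most_structural(sketch_attn, candidate_attns):
    """Index of the candidate whose attention map is closest to the sketch's."""
    dists = [structure_distance(sketch_attn, c) for c in candidate_attns]
    return min(range(len(dists)), key=dists.__getitem__)


if __name__ == "__main__":
    random.seed(0)
    # Toy token embeddings standing in for sketch and generated-image tokens.
    sketch_tokens = [[random.gauss(0, 1) for _ in range(8)] for _ in range(6)]
    other_tokens = [[random.gauss(0, 1) for _ in range(8)] for _ in range(6)]
    sketch_attn = self_attention_map(sketch_tokens, sketch_tokens)
    candidates = [self_attention_map(other_tokens, other_tokens), sketch_attn]
    # The second candidate reuses the sketch's map, so its distance is 0
    # and it is the one selected.
    print(pick_most_structural(sketch_attn, candidates))
```

Comparing attention maps rather than pixels is what lets a comparison like this ignore appearance (a sketch's strokes versus a photo's textures) and focus on which regions relate to which, which is the property the abstract attributes to the transformer's self-attention.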

Citation

@inproceedings{bashkirova@masksketch,
  author = {Bashkirova, Dina and Lezama, Jose and Sohn, Kihyuk and Saenko, Kate and Essa, Irfan},
  title = {MaskSketch: Unpaired Structure-guided Masked Image Generation},
  booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  year = {2023}
}